Python Code Design and Dependency Management

Introduction

This post is going to cover a few tools and features I plan on using when writing Python code in 2019. I’ve grouped these into the following sections:

- Type Hints and Static Analysis

- Interfaces, Protocols and Abstract Methods

- Dependency Management (with pipenv) (OUTDATED! †)

† read my post “Python Management and Project Dependencies”.


Type Hints and Static Analysis

Languages are often described along two separate axes: weakly typed (JavaScript) versus strongly typed (Python), and dynamically typed (Python, JavaScript) versus statically typed (Go, Rust). Being strongly typed means you can’t perform operations inappropriate to the type. For example, in Python you can’t add a number to a string without converting one of them first.
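As a quick illustration of strong typing, here is a minimal sketch. The add function and the outcome/explicit variable names are made up for this example.

```python
def add(a, b):
    """Add two values using Python's + operator."""
    return a + b


# Python refuses to guess how an int and a str should be combined.
try:
    add(1, "2")
    outcome = "no error"
except TypeError as err:
    outcome = f"TypeError: {err}"

# Converting explicitly makes the intent clear and the operation valid.
explicit = add(1, int("2"))

print(outcome)
print(explicit)
```

A weakly typed language would typically coerce one of the operands for you, whereas Python makes you do the conversion yourself.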

Python 3.5 introduced ‘type hints’, a way of annotating Python code with type information so that external tools can provide safety similar to what you might see with a statically typed language.

Python doesn’t enforce these annotations at runtime, so type hints won’t break your code if you declare one expected type but provide a different one.

Consider the following code snippet, which uses type hint annotations to indicate the types for both a function’s parameters as well as the function’s return value:

def foo(n: int) -> str:
    print(f'integer: {n}')
    return n

foo('not an integer')  # prints 'integer: not an integer'

The code states that our foo function expects a parameter of type integer, but when the function is called it’s actually passed a string. This incorrect argument type doesn’t break the program.

Our example code also declares that foo returns a string, yet it returns n, which is annotated as an integer (and at runtime is whatever the caller passed in). This doesn’t break our program either.
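To see that the annotations are recorded but not enforced, here is a minimal sketch that reuses the foo function from above. The result and annotations variable names are mine, added purely for illustration.

```python
def foo(n: int) -> str:
    print(f'integer: {n}')
    return n


# The call succeeds even though the argument is not an int.
result = foo('not an integer')

# The hints are simply stored on the function object for tools to read.
annotations = foo.__annotations__
print(annotations)
```

The interpreter keeps the hints around as metadata, which is exactly what external tools such as mypy build on.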

If you did nothing else at this point, your code would at the very least be very descriptive of the expectations for its use, but what these type hints afford us is the ability to use external tools for handling static analysis.

The most popular tool currently is called ‘mypy’. You can run mypy via the command line, and it also provides integrated support for most code editors. This means you can catch yourself breaking the expectations of your program when writing/editing code.

Type hints by themselves are quite basic and don’t offer much additional contextual information, so Python added a new typing module to allow for more contextual annotations. One nice feature provided by this typing module is the ability to alias types. The following example is copied verbatim from the Python documentation:

from typing import Dict, Tuple, List

ConnectionOptions = Dict[str, str]
Address = Tuple[str, int]
Server = Tuple[Address, ConnectionOptions]

def broadcast_message(message: str, servers: List[Server]) -> None:
    ...

# The static type checker will treat the previous type signature as
# being exactly equivalent to this one.
def broadcast_message(
        message: str,
        servers: List[Tuple[Tuple[str, int], Dict[str, str]]]) -> None:
    ...

I intend to use type hints a lot more in 2019, both to help me identify potential issues in my code during development and to make clear what input/output types are expected.

For more examples of using the typing module, please refer to either the official documentation or this useful tutsplus.com article.

Interfaces, Protocols and Abstract Methods

In the field of programming there are two concepts that can be a bit confusing to understand:

- interfaces

- abstract classes/methods

We’ll cover both of these in the following sections, along with Python’s own ‘protocols’ and ‘abstract base classes’.

In summary: an interface is a contract that defines ‘behaviour’, but has no implementation. An abstract class is an actual class that can define common behaviour (including its implementation) along with abstract methods that have no implementation. The implementation of the abstract methods will be defined by the subclass.

Interfaces

An interface is useful for when you don’t necessarily care for a specific concrete implementation of some functionality and will happily accept any object as long as it abides by the behavioural contract your receiver requires.

For the specific use case of interfaces Python has traditionally relied on ‘duck typing’, which is where a caller provides an object and the receiver will attempt to call the appropriate method on the object (thus trusting the object has a corresponding method available).

Note: this is where the term duck typing comes from: “If it walks like a duck and it quacks like a duck, then it must be a duck”. If the provided object exposes the expected method, then we presume it’s of a suitable type.
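A minimal sketch of duck typing in practice follows. Every name here (Duck, Robot, Rock, make_it_quack) is hypothetical and exists only for this illustration.

```python
class Duck:
    def quack(self):
        return "Quack!"


class Robot:
    # Not related to Duck at all, but it exposes the same method.
    def quack(self):
        return "Beep (pretending to quack)"


class Rock:
    # No quack method, so it does not satisfy the implicit contract.
    pass


def make_it_quack(thing):
    """Trust that the given object has a quack method and call it."""
    return thing.quack()


sounds = [make_it_quack(Duck()), make_it_quack(Robot())]

try:
    make_it_quack(Rock())
    rock_result = "quacked"
except AttributeError:
    rock_result = "AttributeError"

print(sounds, rock_result)
```

The receiver never checks the type of what it is given, so the failure for Rock only shows up at the moment the missing method is called.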

In some cases, such as a reusable shared library, it might be preferable to write code defensively, meaning you verify the provided object has the interface the receiver is expecting. This is demonstrated in the following example, which expects a client object to be provided. That object needs to be an HTTP client and so must have both a get and a post method.

We could trust the caller has read the documentation and provided an appropriate object, but we don’t want to rely on that, so we manually defend against that failure scenario in our code:

import tornado.web

async def execute_fetch(client, endpoint):
    """Make asynchronous requests via given http client."""
    if invalid_client_interface(client):
        raise tornado.web.HTTPError(500, reason="Invalid HTTP Client")
    ...

def invalid_client_interface(client):
    """Return True if the http client lacks the supported interface."""
    if hasattr(client, 'post') and hasattr(client, 'get'):
        return False
    return True

Python isn’t statically typed and has no built-in ‘interface’ construct, but the ‘defensive’ code above is a way to mimic one manually at runtime (Python is a dynamic language with no compilation step, so there is no earlier point at which the check could happen).

Protocols

Python provides ‘protocols’ as part of its ‘collections.abc’ module, which is also home to another concept in Python called ‘abstract base classes’ (something we’ll look at shortly, as ABCs are designed to play nicely with protocols).

Protocols are similar in spirit to interfaces in other languages, but in practice act more like guidelines (this is because Python is a dynamic language and so strictly speaking it isn’t able to validate code correctness because there’s no compilation step with Python).

In essence a protocol is an interface (it defines expected behaviours), while Python’s ‘Abstract Base Classes’ provide a way to offer a form of runtime safety for the interface.

But to understand ‘protocols’ and how we can utilize either mypy or Abstract Base Classes with them, you’ll need to know a bit about ‘magic methods’ in Python…

Magic Methods:

If one of your custom defined objects implements specific ‘magic’ methods (e.g. __len__, __del__ etc), then you’ll find a selection of builtin Python functions become available to use on those objects that otherwise those builtin functions wouldn’t necessarily support.

For example, if we implement the __len__ magic method, then our object will be able to utilise the builtin len function.

If we utilize protocols, then we can use mypy along with type hinting to implement a development time interface check.

Consider the following code snippet:

class Team:
    def __init__(self, members):
        self.members = members

t = Team(['foo', 'bar', 'baz'])
t.members  # ['foo', 'bar', 'baz']
len(t)  # TypeError: object of type 'Team' has no len()

This code doesn’t work because the len function provided by the Python standard library doesn’t work on custom classes unless the class defines a __len__ magic method.

If we add a __len__ method to the above example (see below), then we would find the Team class now supports the collections.abc.Sized protocol and so the len function will be able to work when given an instance of Team:

class Team:
    def __init__(self, members):
        self.members = members

    def __len__(self):
        return len(self.members)

t = Team(['foo', 'bar', 'baz'])
t.members  # ['foo', 'bar', 'baz']
len(t)  # 3

Now let’s use mypy to help verify our code at development time. Say we have a function that should accept any argument that supports the len function (i.e. anything that supports the collections.abc.Sized protocol). We can express that with the typing.Sized type (see the example below, which adds such a function called print_size):

import typing

class Team:
    def __init__(self, members):
        self.members = members

    def __len__(self):
        return len(self.members)

t = Team(['foo', 'bar', 'baz'])

def print_size(s: typing.Sized):
    print(len(s))

print_size(t)

Notice that in the above example we state that the first argument to print_size should be of type typing.Sized, which is an alias for the collections.abc.Sized protocol.

If we use the mypy static analysis tool as part of our application testing process (e.g. we only deploy the code if mypy is happy), then we can feel confident our code will be safe.

This is because if we were ever to change the code in a way where we were passing something to print_size that didn’t support calling len() on it, then the mypy analysis would fail.
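mypy only helps at development time. If you also want a check at runtime, one option (a sketch, not the only approach) is to test against the collections.abc.Sized abstract base class with isinstance. The describe_size function and the Solo class are hypothetical names made up for this example.

```python
from collections.abc import Sized


class Team:
    def __init__(self, members):
        self.members = members

    def __len__(self):
        return len(self.members)


class Solo:
    # Deliberately has no __len__, so it is not Sized.
    pass


def describe_size(obj):
    """Return len(obj), rejecting objects that do not support len()."""
    if not isinstance(obj, Sized):
        raise TypeError(f"{type(obj).__name__} does not support len()")
    return len(obj)


team_size = describe_size(Team(['foo', 'bar', 'baz']))

try:
    describe_size(Solo())
    solo_result = "accepted"
except TypeError as err:
    solo_result = str(err)

print(team_size, solo_result)
```

Team never inherits from Sized, yet isinstance still succeeds because the ABC recognises any class that defines __len__.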

Custom Protocols

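The Python typing module also lets you define your own distinct types using typing.NewType. Strictly speaking, a NewType is a named subtype of an existing type rather than a protocol (structural protocols are declared with typing.Protocol, available from Python 3.8), but it is a handy way to give an existing type a more specific name that mypy can check.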

Let’s look at a simple example first to understand the use of NewType:

from typing import NewType

I = NewType('I', int)

def foo() -> I:
    return I(123)

Notice a few things in the above example:

- the first argument to typing.NewType needs to match the name of the variable it is assigned to.

- we can’t just return an integer, it needs to be cast to the new type I first.
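It’s worth knowing that the ‘cast’ only matters to the static type checker. The sketch below (UserId and lookup are hypothetical names for illustration) shows that at runtime the value is still a plain int.

```python
from typing import NewType

UserId = NewType('UserId', int)


def lookup(user_id: UserId) -> str:
    """Pretend to look up a user; mypy would reject a bare int here."""
    return f"user-{user_id}"


uid = UserId(123)

# At runtime NewType simply hands back the value it was given.
runtime_type = type(uid)
label = lookup(uid)

print(runtime_type, label)
```

So NewType costs nothing at runtime, and all of the checking happens when mypy analyses the code.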

Now that we have a basic understanding of NewType, let’s consider the following example where we create a new type called CustomProtocol:

import typing

class Team:
    def __init__(self, members):
        self.members = members

    def __len__(self):
        return len(self.members)

t = Team(['foo', 'bar', 'baz'])

def print_size(s: typing.Sized):
    print(len(s))

print_size(t)  # prints '3'

CustomProtocol = typing.NewType('CustomProtocol', Team)

cp = CustomProtocol(Team(['beep', 'boop']))  # type(cp) is <class '__main__.Team'>

print_size(cp)  # prints '2'

Note: when we create an instance of CustomProtocol the underlying ‘type’ is Team.

The mypy static analysis tool can subsequently be used to verify code for both native protocols and custom protocols, like so (see the type hint annotation added to the print_size function, which mypy is happy with):

import typing

class Team:
    def __init__(self, members):
        self.members = members

    def __len__(self):
        return len(self.members)

CustomProtocol = typing.NewType('CustomProtocol', Team)

def print_size(s: CustomProtocol):  # we could also set type to `Team`
    print(len(s))  # prints '2'

cp = CustomProtocol(Team(['beep', 'boop']))

print_size(cp)

Note: the argument type passed to print_size is CustomProtocol, which doesn’t make mypy complain because the underlying type for CustomProtocol is actually the Team class, and the underlying Team class supports the typing.Sized interface (which maps to the collections.abc.Sized protocol).

If you want more information on mypy’s support of protocols, I suggest reading their specific documentation here.
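For comparison, here is a minimal sketch of a structural protocol declared with typing.Protocol, which requires Python 3.8 or later. It revisits the earlier HTTP client idea. SupportsFetch, FakeClient and fetch are hypothetical names invented for this example, not part of any library.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class SupportsFetch(Protocol):
    """Anything with get and post methods satisfies this protocol."""

    def get(self, url: str) -> str: ...

    def post(self, url: str, body: str) -> str: ...


class FakeClient:
    # Note: FakeClient does not inherit from SupportsFetch.
    def get(self, url: str) -> str:
        return f"GET {url}"

    def post(self, url: str, body: str) -> str:
        return f"POST {url}"


def fetch(client: SupportsFetch, url: str) -> str:
    """mypy checks that the client has the required methods."""
    return client.get(url)


result = fetch(FakeClient(), "/status")

# runtime_checkable also allows an isinstance check on method presence.
is_client = isinstance(FakeClient(), SupportsFetch)
is_not_client = isinstance(object(), SupportsFetch)

print(result, is_client, is_not_client)
```

The runtime isinstance check only confirms that the methods exist. Verifying their signatures is left to the static type checker.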

Abstract Classes/Methods

An abstract class allows you to define common behaviour as well as ‘abstract methods’ that have no implementation and that each subclass is required to implement.

We can mimic that concept in Python using standard classes along with the classic ’template method’ pattern as shown in the following example:

class MyAbstractClass:
    def common(self):
        print('common behaviour')

    def MyAbstractMethod(self):
        raise NotImplementedError

class Foo(MyAbstractClass):
    def MyAbstractMethod(self):
        print('do something')

class Bar(MyAbstractClass):
    pass

f = Foo()
f.common()  # prints 'common behaviour'
f.MyAbstractMethod()  # prints 'do something'

b = Bar()
b.common()  # prints 'common behaviour'
b.MyAbstractMethod()  # raises NotImplementedError

o = MyAbstractClass()  # not possible in other languages (see note below)
o.common()  # prints 'common behaviour'
o.MyAbstractMethod()  # raises NotImplementedError

Note: in other languages that support proper abstract classes, you would not be able to instantiate the abstract class directly (like we have done in our example).

Luckily Python also provides what it refers to as ‘Abstract Base Classes’ (hereafter referred to as ABCs), which are a form of traditional abstract class, so there’s no need to mimic the behaviour like in our earlier example. The following example demonstrates this feature:

import abc

class Foo(abc.ABC):
    @abc.abstractmethod
    def bar(self):
        pass

class Thing(Foo):
    pass

t = Thing()  # TypeError: Can't instantiate abstract class Thing with abstract methods bar

To make the above example code work correctly we need our class Thing to actually implement the expected behaviour (i.e. a bar method). If Thing doesn’t provide the expected behaviour then we can’t instantiate it, or any other subclass of Foo that leaves bar unimplemented.
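Here is a minimal sketch of the working version. The common method and the Incomplete class are additions of mine for illustration and are not part of the example above.

```python
import abc


class Foo(abc.ABC):
    def common(self):
        # Shared behaviour that every subclass inherits.
        return 'common behaviour'

    @abc.abstractmethod
    def bar(self):
        """Subclasses must provide an implementation."""


class Thing(Foo):
    def bar(self):
        return 'bar from Thing'


class Incomplete(Foo):
    # Does not implement bar, so it cannot be instantiated.
    pass


t = Thing()
outputs = (t.common(), t.bar())

try:
    Incomplete()
    incomplete_result = 'instantiated'
except TypeError:
    incomplete_result = 'TypeError'

print(outputs, incomplete_result)
```

Unlike the NotImplementedError approach shown earlier, the failure happens when the object is created rather than when the missing method is eventually called.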

This also means that if we’re using a static analysis tool such as mypy, we could have a receiver state it expects a type of Thing and know more confidently that Thing will definitely provide the behaviour we need.

It’s important to understand that the use of an abstract class is subtly different to the use of traditional interfaces, in that an interface doesn’t rely on a concrete implementation.

For example, our Thing class is a concrete implementation, so if the receiver is annotated as expecting Thing we can’t provide it with a different class (even one that also inherits from Foo), as it won’t be equivalent to the Thing type.

Note: the mypy docs have a good detailed breakdown of how to indicate a dependency of a specific class type.

Dependency Management (with pipenv)

UPDATE 2019.12.20: I no longer use Pipenv (as per below). I’ve written an updated version of how best to handle dependencies here.

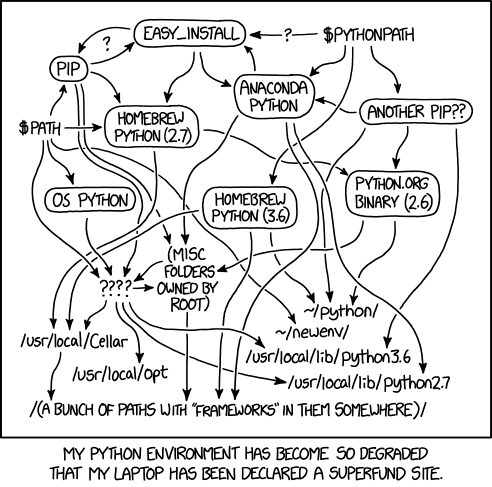

Python has historically utilised a requirements.txt file for defining the dependencies required of your program, but there are various annoying complications that go along with the traditional model of handling dependencies which has meant we have a few new players in the field to help us.

One such concern is the setting up of multiple virtual environments for the various projects we need to work on:

So here are the various alternatives we have to play with in 2019:

I’ll be showing you the last tool in the list: Pipenv.

Another approach to the specific problem of virtual environments is to use Docker containers for your development, but you’ll need to be comfortable using a terminal editor like Vim (unless you want to jump through some X11 hoops). Using containers also doesn’t eliminate the other issues with determining the right dependencies, so keep reading anyway.

Note: if using Docker with a terminal editor like Vim to solve this problem sounds like a good approach for you, then review an older post of mine that explains how to do that.

Here are the commands necessary to install Pipenv on macOS:

brew install pyenv
pip install pipenv

Note: you’ll need Homebrew to install the pyenv command (a sub dependency) using brew, and macOS should have Python 2.7.x installed by default so you should have the pip command available already.

Here are my quick steps for setting up a new project with Pipenv:

mkdir foobar && cd foobar
pipenv --python 3.7

Note: use pyenv install --list to find out what Python versions are available to install.

Now when working on a Pipenv project:

cd foobar
pipenv shell

or

pipenv run python ./app.py

Note: use the shell subcommand to have your current terminal permanently use the chosen Python version (e.g. python ./app.py will work as if the current Python version is what you’ve defined), otherwise use the run subcommand to execute the given command (e.g. python ./app.py) within the chosen Python version temporarily.

You can now install dependencies specifically for the project’s specific Python environment:

pipenv install tornado==5.0.2
pipenv install --dev mypy tox flake8

Note: if you have an existing requirements.txt file, then you can generate a Pipfile from that using pipenv install -r requirements.txt. Alternatively, if you need to do the reverse (generate a requirements file from a Pipfile): pipenv lock --requirements

Now none of these new tools are perfect, and if you want a good run down of one engineer’s perspective on them, read here.

Conclusion

That’s it. We’ve looked at how to handle dependencies with Pipenv, and how to use the static analysis tool mypy along with type hinting to give us more confidence in our code (as well as making the code’s intent clearer).

Lastly we looked at how to utilise interfaces and abstract classes to help improve the structure and safety of our code.

Along with new additions to the asyncio module (a simpler API for a start) and cleaner abstractions such as the new data classes feature, the future of Python has never looked brighter.
